Jaan Mannik – Director of Commercial Sales

In my last blog post, What is the Workhorse Advancing HPC at the Edge?, I highlighted how enterprise applications that require the highest-end compute for their AI workloads at the edge are leveraging data-center-grade NVIDIA GPUs to get even greater performance. Processing and storing data closer to where the action takes place means decisions can be made more quickly, with lower latency, improved security, greater reliability, and much higher performance. In this post, I’ll cover the transition from big, power-hungry GPUs to smaller form factor electronic control units, better known as ECUs, at the very edge.

GPUs have traditionally been the workhorse for training and retraining AI models, thanks to their massively parallel architecture designed for general-purpose computing. Training an AI model typically involves processing vast amounts of data through complex mathematical operations to adjust the model parameters, essentially teaching it to make more accurate predictions or classifications over time. Enterprise-class NVIDIA GPUs like the PCIe Gen4 A100 or the newer PCIe Gen5 H100 are supported by specialized software such as the CUDA platform and the cuDNN library, which AI/ML frameworks use for GPU acceleration, so that AI developers can take advantage of the full potential of their GPUs.
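To make "adjusting the model parameters" concrete, here is a minimal sketch of a training loop in plain Python. It is an illustration only: the tiny linear model, the synthetic data, and the names `make_data`, `mse`, and `train` are assumptions made for this example, not anything from an NVIDIA library. Real training runs apply the same idea to millions or billions of parameters, which is where GPU acceleration pays off.

```python
import random

def make_data(n, slope, intercept, seed):
    """Generate n noisy points on the line y = slope * x + intercept."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [slope * x + intercept + rng.gauss(0.0, 0.01) for x in xs]
    return xs, ys

def mse(w, b, xs, ys):
    """Mean squared error of the model y = w * x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(xs, ys, w=0.0, b=0.0, lr=0.1, epochs=200):
    """Plain gradient descent: nudge w and b against the error gradient."""
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical "ground truth" the model has to learn: y = 3.0 * x - 1.0
xs, ys = make_data(1000, slope=3.0, intercept=-1.0, seed=0)
loss_before = mse(0.0, 0.0, xs, ys)
w, b = train(xs, ys)
loss_after = mse(w, b, xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}, loss {loss_before:.4f} -> {loss_after:.6f}")
```

Every pass over the data repeats the same arithmetic for each sample, and that kind of repetitive, independent math is what a massively parallel processor handles well.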

Retraining AI models is also extremely important for improving model accuracy and relevance over time. When new data becomes available, retraining adapts the model to changing circumstances. Retraining typically requires fewer computational resources than the initial training because the new datasets are smaller and the model is already largely optimized. This kind of logic can be applied to real-life situations as well. For example, think of a high school baseball pitcher who throws a great fastball (I’m reliving my glory days for a moment). To teach him to throw a curveball, the coach will show him a new way to grip the baseball so the ball breaks across the plate. The pitcher doesn’t need to be taught how to push off the mound and release the ball toward the catcher because he’s already learned how to do that. It’s a new, small dataset applied to an existing model (or pitcher), which is retrained to throw fastballs AND curveballs.
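The retraining idea can be sketched the same way. The example below is a rough illustration under stated assumptions: a two-parameter linear model stands in for a real network, `pretrained` stands in for the result of an earlier, much larger training run, and the helper names `mse` and `gradient_steps` are hypothetical. Both runs get the same small dataset and the same small compute budget; the only difference is the starting point.

```python
import random

def mse(w, b, data):
    """Mean squared error of the model y = w * x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def gradient_steps(w, b, data, lr=0.1, epochs=20):
    """Run a small, fixed budget of gradient descent steps from (w, b)."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

rng = random.Random(1)
# Small "new" dataset: conditions drifted from y = 3.0x - 1.0 to y = 3.2x - 1.0
new_data = [(x, 3.2 * x - 1.0) for x in (rng.uniform(-1.0, 1.0) for _ in range(50))]

pretrained = (3.0, -1.0)  # assumed output of the original training run
stale_loss = mse(*pretrained, new_data)

# Same data, same 20-step budget, different starting points
warm = gradient_steps(*pretrained, new_data)  # retrain the existing model
cold = gradient_steps(0.0, 0.0, new_data)     # start over from scratch

warm_loss = mse(*warm, new_data)
cold_loss = mse(*cold, new_data)
print(f"stale={stale_loss:.4f} retrained={warm_loss:.4f} from_scratch={cold_loss:.4f}")
```

Starting from the existing parameters is the pitcher who already knows how to push off the mound: a few steps on a small dataset are enough to pick up the new behavior, while the from-scratch run is still far from accurate on the same budget.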

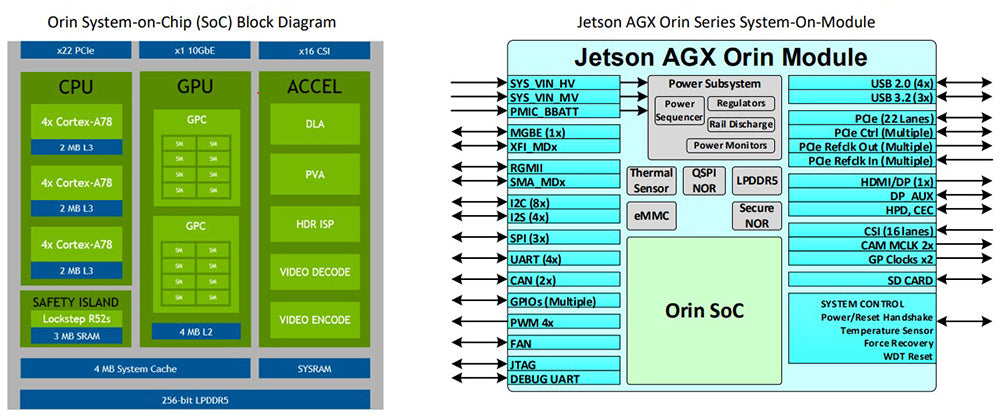

The same logic applies to seasoned AI models that are ready for production at the edge, and this is where the electronic control unit (ECU) becomes so important. Edge deployments still need real-time processing, but their non-traditional environments also impose constraints on power consumption, ruggedization, size, weight, and cooling. The big, power-hungry NVIDIA® A100 and H100 GPUs used initially to train models in a datacenter are no longer suitable there, and can be replaced by specialized ECUs like NVIDIA Orin. NVIDIA Orin modules, such as the Jetson™ AGX Orin module, are highly integrated and powerful system-on-chip (SoC) modules specifically designed to address the constraints of non-traditional edge environments mentioned above. Each ECU combines multiple Arm CPU cores, NVIDIA’s custom-designed GPU cores, dedicated hardware accelerators for AI/ML tasks, and a variety of edge-relevant I/O interfaces into a single compact, power-efficient package, making it an ideal solution for edge devices with constrained resources. Connecting multiple ECUs together can increase performance and also introduce redundancy, which is extremely important for edge applications like autonomous driving, robotics, and smart cities.

In conclusion, the shift from GPUs to ECUs like NVIDIA Orin in edge computing reflects the evolving demands of modern applications. The move towards power-efficient, compact, and integrated solutions has been driven by the need for real-time processing, low latency, and AI capabilities at the very edge. As technology continues to advance, ECUs are likely to play an increasingly pivotal role in shaping the future of edge computing.

