By Jaan Mannik, Director of Commercial Sales

Edge computing is becoming increasingly important due to the rapid growth of the Internet of Things (IoT) and the need for real-time data processing and analysis. Edge computing involves processing and storing data at the edge of the network, closer to where it is generated, rather than in a centralized location. Performing these functions closer to where the action takes place means decisions can be made more quickly, with reduced latency, improved security, greater reliability, and higher performance. The concept is being adopted across a wide range of market verticals today. However, edge locations are difficult to optimize and often require reduced physical footprints, non-traditional power and cooling envelopes, and unique rugged designs. Enterprise applications that require the highest-end compute for their AI workloads at the Edge are leveraging data-center-grade NVIDIA GPUs to get even greater performance. In this blog, I’ll cover the workhorse advancing HPC at the Edge and how it is being deployed in real-world AI Transportable applications today.

The workhorse advancing HPC at the Edge is the GPU (graphics processing unit), which provides a powerful and efficient way to process large amounts of data in real time. Using GPUs for parallel computing operations is not a new concept. GPU-accelerated supercomputers began appearing in traditional rack-scale environments in the late 2000s, and in 2012 Oak Ridge National Laboratory upgraded its Jaguar supercomputer into Titan, which added NVIDIA GPUs for scientific computing. The use of GPUs in supercomputers grew in popularity in the following years because they can perform many operations simultaneously, making them a natural fit for high-performance computing applications such as simulations and modeling. As of June 2021, a majority of the world's ten fastest supercomputers use GPUs to accelerate their computations. The top-ranked system, Fugaku in Japan, is a notable exception that relies on Arm-based CPUs.


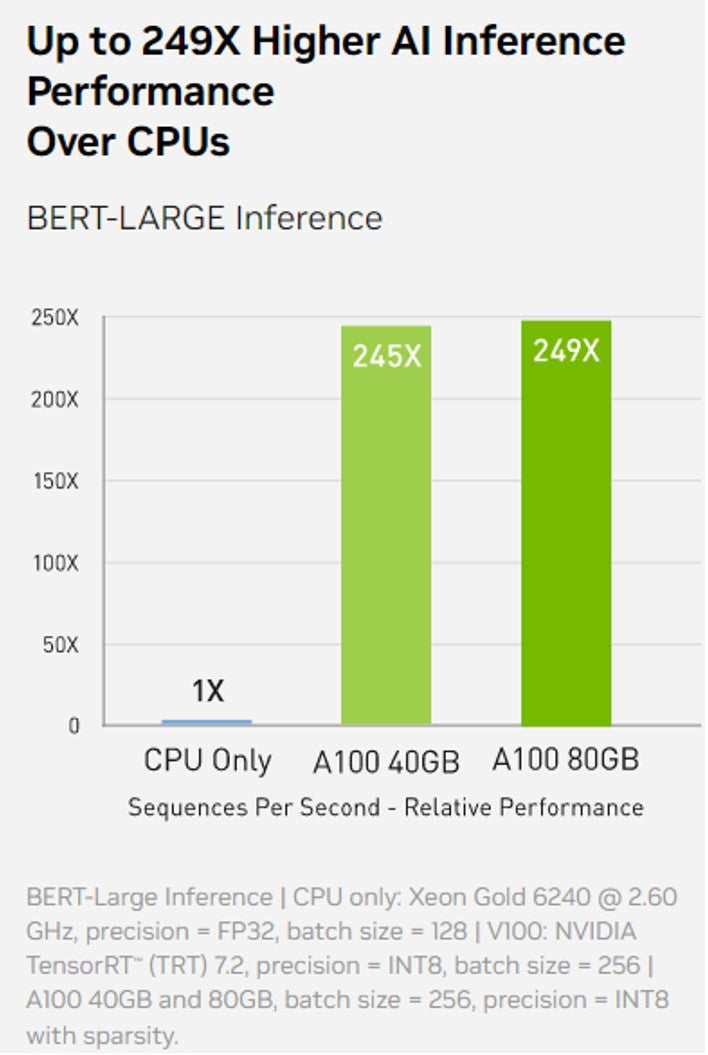

So why is the GPU advancing HPC at the Edge? A single NVIDIA A100 80GB GPU can deliver far higher performance than a CPU on parallel workloads while requiring a much smaller footprint. As NVIDIA explains, “On state-of-the-art conversational AI models like BERT, A100 accelerates inference throughput up to 249x over CPU.” This makes GPUs ideal for high-performance computing at the Edge, where fast processing is essential to ensure timely decision-making and response. A single 3U rugged server supporting four NVIDIA A100 GPUs can replace an entire rack of CPU-based servers traditionally found clustered in a datacenter, which is a game changer for Edge applications where size, space, power, and cooling are constrained. For example, in autonomous vehicles, GPUs can process sensor data and make real-time decisions to control the vehicle. In agriculture, GPUs can identify weeds for targeted removal, helping crops grow without pesticides. In healthcare, GPUs can analyze medical images in real time to support more accurate diagnoses. One Stop Systems' 3U SDS A100 GPU Server is an NVIDIA Certified rugged HPC platform being deployed in these environments today, supporting up to four A100 GPUs and 500TB of NVMe flash storage in a compact 3U chassis optimized for the very Edge.
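To make the rack-replacement reasoning concrete, here is a rough back-of-the-envelope sketch in Python. It is illustrative only: the function name, the `sustained_fraction` parameter, and the assumption that the quoted speedup is measured against one baseline CPU server are all hypothetical choices for this example, not figures from NVIDIA or One Stop Systems. The "up to 249x" number is a best-case figure for BERT inference, so real workloads will typically land well below it.

```python
import math


def cpu_servers_matched(gpus_per_node: int,
                        speedup_vs_cpu_server: float,
                        sustained_fraction: float = 1.0) -> int:
    """Estimate how many CPU servers one GPU node could match in throughput.

    Assumptions (hypothetical, for illustration only):
      - speedup_vs_cpu_server is the per-GPU throughput gain relative to
        ONE baseline CPU server.
      - sustained_fraction (0 < f <= 1) scales the best-case speedup down
        to what a given workload actually sustains.
      - Throughput scales linearly with the number of GPUs.
    """
    if gpus_per_node <= 0 or speedup_vs_cpu_server <= 0:
        raise ValueError("GPU count and speedup must be positive")
    if not 0 < sustained_fraction <= 1:
        raise ValueError("sustained_fraction must be in (0, 1]")

    # Total throughput of the node, expressed in "CPU server equivalents".
    equivalents = gpus_per_node * speedup_vs_cpu_server * sustained_fraction
    # Round up: a partial server still has to be a whole server.
    return math.ceil(equivalents)


if __name__ == "__main__":
    # Best case: four GPUs at the full quoted "up to 249x".
    print(cpu_servers_matched(4, 249.0))
    # More conservative: the workload sustains only 5% of the best case.
    print(cpu_servers_matched(4, 249.0, sustained_fraction=0.05))
```

Even under the conservative 5% assumption, the estimate comes out at roughly a rack's worth of 1U servers, which is the intuition behind replacing a rack with a single 3U system. Treat the result as an order-of-magnitude guide rather than a sizing tool.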

Overall, edge computing can help organizations improve their operational efficiency, reduce costs, and increase the performance and reliability of their applications. Adding enterprise-class GPUs brings the power of the data center to the very Edge, providing a powerful and efficient way to process large amounts of data in real time where it is most needed. This ‘workhorse’ is an incredibly important technology that is pushing the limits of Edge computing, enabling new and innovative applications that were not possible before.


Rein Mannik

June 02, 2023

Impressive and convincing the need for having information available so that decisions can be earlier. Almost like integrating some analogue computing with digital computing.