Special Guest Blogger: Seamus Egan, Vice President Government Integrated Solutions at TMGcore, Inc.

Introduction

The past two decades have seen exponential growth in generated data, driven by the increased digitization of business processes, the instrumentation of devices, and the need to capture, collect, and process vast quantities of unstructured data such as video and images. This evolution has produced new programming languages, new data storage architectures, and rapid advances in the infrastructure and chip architectures required to transform these aggregated data sets into business insight and competitive advantage for businesses and governments. Chip manufacturers such as Intel, AMD, and NVIDIA are responding to this market demand by developing larger and more complex silicon architectures, including some with embedded high-bandwidth memory. Monolithic chip architectures are being replaced by multi-tile designs with 3-D stacking, which deliver significant performance gains and provide design platforms that allow flexible configurations tailored to business workloads. A challenging byproduct of this development is increased heat generation, which is taxing the limits of traditional air-cooled deployments and threatening to limit the performance of these advanced CPU and GPU systems.

High Density in the Core Data Center versus the Edge

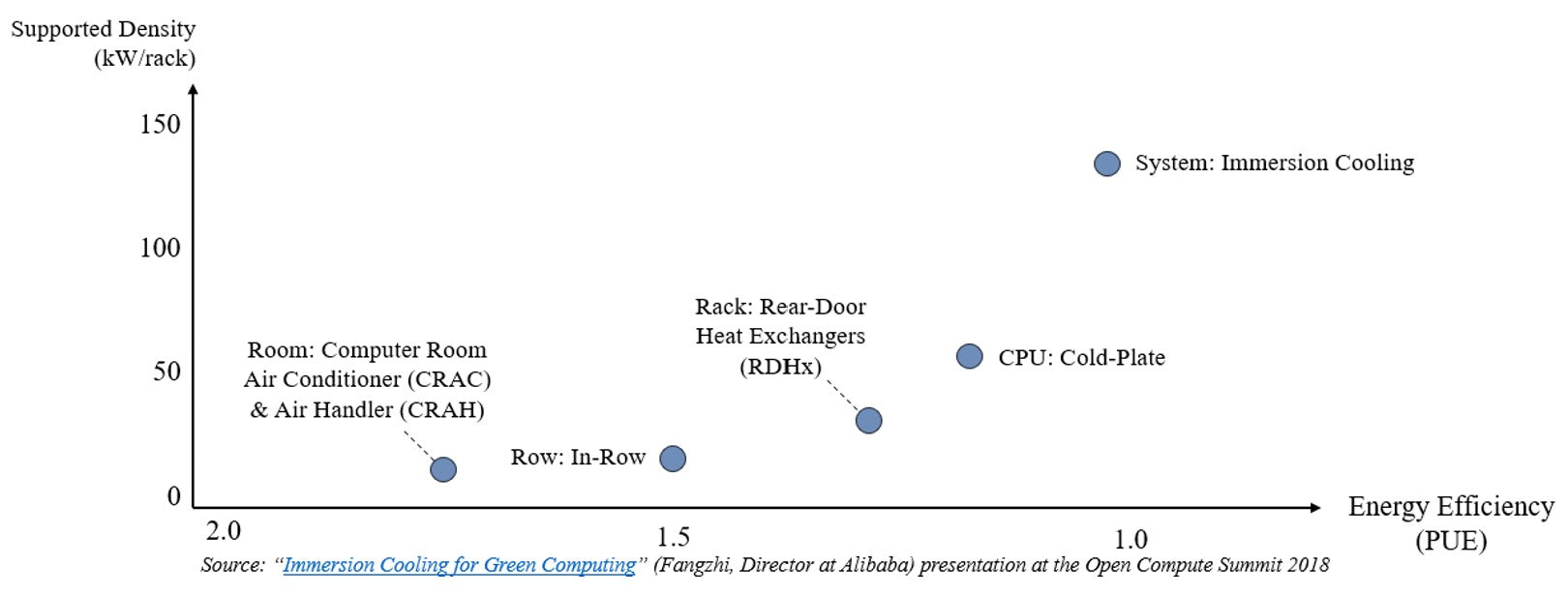

Traditional data centers are adapting to these increasingly dense workloads by reducing rack densities, supplementing cooling with rear door heat exchangers, and in some cases, leveraging direct-to-chip cooling with its associated cooling distribution units. Server architectures are moving rapidly from 2U to 4U, 6U, and 8U chassis to accommodate the larger heatsinks and fan systems required to dissipate the heat these systems generate. Power Usage Effectiveness (PUE), the ratio of total facility energy (compute plus the overhead needed to run it) to the energy consumed by the compute equipment alone, suffers because of the additional energy required to air-cool these systems. Customers and data center operators can perform a cost-benefit analysis to determine the best balance of cooling strategies given the constraints of their data centers. Ultimately, data center designs will morph from air-cooled to hybrid cooling designs, and finally to high-efficiency, low-PUE advanced cooling facilities aligned with the silicon roadmaps. This transformation will be accelerated by the Real Estate Investment Trusts (REITs) that dominate the at-risk development of large-scale data centers for businesses. Those REITs, driven by the need for a 15-20% rate of return on the capital expended on new data centers, will be reluctant to develop legacy air-cooled data centers and risk having the wrong product for the market within a five-year timeframe.
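As a rough illustration of the metric discussed above, PUE is conventionally computed as total facility energy divided by the energy used by the IT equipment, so a value of 1.0 would mean zero overhead. The Python sketch below uses made-up energy figures (assumptions for illustration, not measurements from any facility), and the function name `pue` is hypothetical.

```python
def pue(it_energy_kwh: float, overhead_energy_kwh: float) -> float:
    """Return Power Usage Effectiveness: total facility energy / IT energy.

    overhead_energy_kwh covers cooling, power conversion losses, lighting,
    and anything else that is not the compute equipment itself.
    """
    if it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    if overhead_energy_kwh < 0:
        raise ValueError("overhead energy cannot be negative")
    return (it_energy_kwh + overhead_energy_kwh) / it_energy_kwh


# Hypothetical facilities with the same compute load but different overhead.
air_cooled = pue(it_energy_kwh=1000.0, overhead_energy_kwh=600.0)
low_overhead = pue(it_energy_kwh=1000.0, overhead_energy_kwh=30.0)

print(f"Air-cooled example:   PUE = {air_cooled:.2f}")
print(f"Low-overhead example: PUE = {low_overhead:.2f}")
```

With these assumed numbers, every kilowatt-hour of compute in the first case carries 0.6 kWh of overhead, versus 0.03 kWh in the second.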

While data centers have some levers they can adjust to stretch the capabilities of existing infrastructure, the same cannot be said for the Edge. Edge server design has always been constrained by power limitations and the need to operate in austere locations without the protections of the data center. The exponential growth in data at the Edge, combined with the need to deploy advanced compute architectures to process this data, is driving the need for advanced, energy-efficient cooling designs to meet this challenge.

Liquid Immersion Cooling at the Edge

As discussed earlier, air cooling is just one of many techniques that can be applied to address the cooling needs of compute architectures, and increasing server densities are stressing the limits of air as a primary cooling technique. Liquid immersion cooling has the advantage of supporting extremely high-density compute and doing so very efficiently from a PUE perspective.

With immersion cooling, the responsibility for and control of the cooling process shifts from the individual server to the immersion cooling platform. This simplifies the architecture and design of the server by eliminating fault-prone fans, large-displacement heatsinks, and multi-RU server chassis designed to support airflow. The result is a solid-state server that operates at its optimal performance level and is better suited to the diverse deployment scenarios experienced at the Edge.

Server immersed in inert, dielectric two-phase fluid.

When it comes to liquid immersion cooling, two-phase liquid immersion cooling (2PLIC) delivers the lowest PUEs for the highest compute densities in the market. 2PLIC leverages an inert dielectric (non-conductive) fluid to support heat transfer rates that are orders of magnitude higher than those of air. When the server is immersed in the 2PLIC fluid, the fluid in contact with the hot processor changes state to a vapor and rapidly carries the heat away from the processor to the surface of the tank, where it is condensed back into a liquid. The condensation process provides a very efficient way to move the heat out to a final heat rejection mechanism, such as a dry cooler or chiller system. TMGcore’s 2PLIC systems are designed to control the cycle with zero vapor loss and at normal atmospheric pressure. The relatively low boiling point of the dielectric fluid and the buoyancy of the bubbles create an isothermal bath that maintains all components on the server at an optimal temperature, with no need for pumps to circulate the fluid.
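The amount of vapor involved in this boil-and-condense cycle can be estimated from the latent heat relation: heat load = vapor mass flow × latent heat of vaporization. The sketch below is a back-of-the-envelope calculation only. The 10 kW heat load and the 100 kJ/kg latent heat are assumed placeholder values, not TMGcore specifications or datasheet figures for any particular fluid, and the function name is hypothetical.

```python
def vapor_mass_flow_kg_per_s(heat_load_w: float,
                             latent_heat_j_per_kg: float) -> float:
    """Mass of fluid that must vaporize per second to absorb heat_load_w.

    Uses Q = m_dot * h_fg and ignores sensible heating of the liquid,
    which is a simplification for a bath already near its boiling point.
    """
    if heat_load_w < 0:
        raise ValueError("heat load cannot be negative")
    if latent_heat_j_per_kg <= 0:
        raise ValueError("latent heat must be positive")
    return heat_load_w / latent_heat_j_per_kg


# Assumed placeholder inputs (not vendor data).
HEAT_LOAD_W = 10_000.0              # 10 kW of server heat
LATENT_HEAT_J_PER_KG = 100_000.0    # 100 kJ/kg, illustrative only

flow = vapor_mass_flow_kg_per_s(HEAT_LOAD_W, LATENT_HEAT_J_PER_KG)
print(f"Vapor generated: {flow:.3f} kg/s")
```

In a closed tank operating at steady state, the condenser would need to return liquid at the same rate, which is why the condensation step governs how much heat the system can reject.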

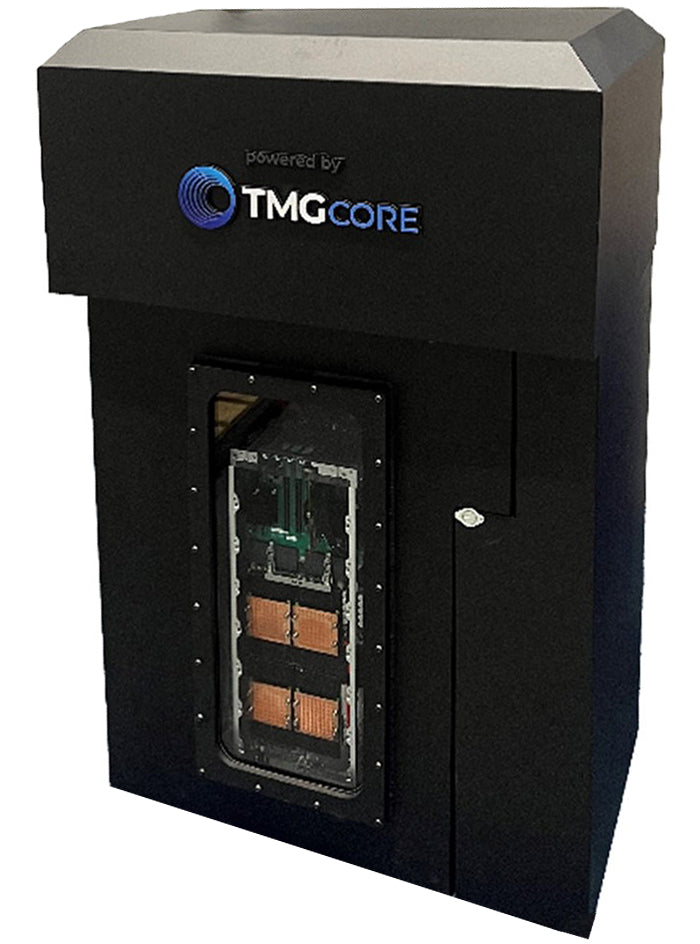

TMGcore’s EdgeBox delivers HPC at the Edge

TMGcore has developed the EdgeBox series of platforms to enable the delivery of advanced compute architectures, such as One Stop Systems' (OSS) Rigel Edge Supercomputer, at the Edge. The EdgeBox is designed to support all the lifecycle management needs of the 2PLIC environment, with the ability to operate fully disconnected in an air-gapped deployment or to leverage a cloud-based management capability to centrally monitor and manage many distributed edge nodes. The EdgeBox X (EB-X) supports up to 4 Rigel Edge Supercomputers, enabling the delivery of over 5 PetaFlops of TensorFlow AI/ML processing, all in a form factor of less than 6 sq. ft. with a partial PUE of less than 1.03. The system is virtually silent, so it can be deployed adjacent to personnel without impeding their ability to carry out their normal functions. The EdgeBox X is designed to plug into customer-selected final heat dissipation solutions and can be configured with different power architectures based on region or power density requirements. The linear nature of the cooling properties of 2PLIC allows the physical platforms to be scaled up as well as down. This will enable small form-factor designs for OSS’ AI Transportables strategies, or larger deployments on seaborne platforms or regional data aggregation nodes. In summary, the IT market is in the infancy of a huge paradigm shift in which TMGcore’s EdgeBox platforms, combined with world-class advanced compute capabilities such as OSS’ Rigel platform, can be deployed to the point of data creation, enabling rapid decision-making with increased data fidelity for business owners and government entities.

TMGcore EdgeBox X with OSS Rigel Edge Supercomputer

Read more about our partnership with TMGcore.


The character of modern warfare is being reshaped by data. Sensors, autonomy, electronic warfare, and AI-driven decision systems are now decisive advantages, but only if compute power can be deployed fast enough and close enough to the fight. This reality sits at the center of recent guidance from the Trump administration and Secretary of War Pete Hegseth, who has repeatedly emphasized that “speed wins; speed dominates” and that advanced compute must move “from the data center to the battlefield.”

OSS specializes in taking the latest commercial GPU, FPGA, NIC, and NVMe technologies, the same acceleration platforms driving hyperscale data centers, and delivering them in rugged, deployable systems purpose-built for U.S. military platforms. At a moment when the Department of War is prioritizing speed, adaptability, and commercial technology insertion, OSS sits at the intersection of performance, ruggedization, and rapid deployment.

Maritime dominance has long been a foundation of U.S. national security and allied stability. Control of the seas enables freedom of navigation, power projection, deterrence, and protection of global trade routes. As the maritime battlespace becomes increasingly contested, congested, and data-driven, dominance is no longer defined solely by the number of ships or missiles, but by the ability to sense, decide, and act faster than adversaries. Rugged High Performance Edge Compute (HPeC) solutions have become a decisive enabler of this advantage.

At the same time, senior Department of War leadership, including directives from the Secretary of War, has made clear that maintaining superiority requires rapid integration of advanced commercial technology into military platforms at the speed of need. Traditional acquisition timelines measured in years are no longer compatible with the pace of technological change or modern threats. Rugged HPeC solutions from One Stop Systems (OSS) directly address this challenge.

Initial design and prototype order valued at approximately $1.2 million

Integration of OSS hardware into prime contractor system further validates OSS capabilities for next-generation 360-degree vision and sensor processing solutions

ESCONDIDO, Calif., Jan. 07, 2026 (GLOBE NEWSWIRE) -- One Stop Systems, Inc. (OSS or the Company) (Nasdaq: OSS), a leader in rugged Enterprise Class compute for artificial intelligence (AI), machine learning (ML) and sensor processing at the edge, today announced it has received an approximately $1.2 million pre-production order from a new U.S. defense prime contractor for the design, development, and delivery of ruggedized integrated compute and visualization systems for U.S. Army combat vehicles.